- As deepfakes become harder to detect, they could be used to manipulate people’s memories and contribute to a phenomenon known as the Mandela Effect.


- The rise of deepfakes has made it easier to create and spread misinformation and to manipulate public opinion by altering people’s memories of past events.

- Some experts warn that deepfakes could have a profound impact on society.

- Mitigate these risks by learning to spot deepfakes through their tell-tale signs.

The internet has been rocked by some mind-boggling images lately: the pope in a puffer jacket, and Vladimir Putin on bended knee kissing Xi Jinping’s hand. The catch? Neither of these events actually happened. Both images are AI-generated fakes known as deepfakes.

These deep learning-based fakes show how easily AI can blur the line between reality and fiction, with significant and far-reaching implications.


We dive into how AI could change the way we remember and how deepfakes might contribute to the phenomenon known as the Mandela Effect. Get ready to question everything you thought you knew.

Can deepfakes be spotted?

Yes, it’s possible to spot a deepfake. While some deepfakes are so seamless they can fool even the most discerning eye, others leave subtle clues. These clues usually involve something that looks unnatural, from an oddly placed shadow to obvious distortions.

However, some deepfakes are crafted with such skill that they’re nearly impossible to spot by eye alone. That’s where technology comes in. Several online tools and websites use sophisticated algorithms to analyze videos for signs of manipulation. These tools can help, but they aren’t foolproof either. The best defense against deepfakes combines vigilance, critical thinking, and an up-to-date awareness of how deepfake technology is evolving.

How to spot deepfakes

In the wise words of deepfaked Abraham Lincoln: “Never trust anything you see on the internet.” It’s become essential for all of us to remain critical and skeptical of the information we consume and for technology companies and governments to work together to develop solutions to detect and prevent the spread of deepfake videos.

In the meantime, learn how to spot a deepfake to protect yourself from the spread of misinformation:

Facial distortions and transformations

- Look for distortions in the lighting and 3D shape of the face. Deepfakes often struggle to blend a face seamlessly onto a head, leaving noticeable edges around the face, hair, or ears.

- Check for inconsistencies in texture, especially in cheeks, forehead, eyebrows, and facial hair.

Eye and lip movements

- Check if shadowing, eye color, facial moles, and blinking movements look realistic. Natural blinking patterns are often difficult for deepfakes to replicate. Be wary of videos where the person doesn’t blink often enough or blinks in an unnatural way.

- Check whether the subject’s lips look natural and match the rest of the face.

Subject movements and gestures

- Pay attention to the movements and gestures of the subject in the video. Observe whether the head and body movements seem coordinated and natural. Deepfakes may have trouble aligning these movements seamlessly.

- Note if they appear awkward or too perfect.

Audio analysis

- Listen closely to the audio in the video. Pay attention to the tone, pitch, and cadence of the voice. Deepfakes may have trouble replicating a person’s voice perfectly, resulting in subtle variations or unnatural intonations.

- Check whether the voice sounds artificial or distorted, which may mean it was generated or altered with AI.

Inconsistencies in lighting and reflections

- Look for inconsistencies in lighting and reflections that may not match the environment or the person’s position in the video or image. Deepfakes may have mismatched lighting or shadows that don’t fall naturally.

Verify the video content

- Find the original video, or search for other copies of it, to verify its content.

- Run a reverse image search on the image, or on screenshots of key frames from a video, with tools such as Google Image Search, TinEye, SauceNAO, or Bing Visual Search.

Contextual analysis

- Evaluate the context in which the video was posted or shared, as it can indicate whether the video is genuine (e.g., why would the pope suddenly deviate from the regalia typically worn in the papal household?)

- Check the original source of the video, whether it’s a reputable news organization, a random person on social media, or an individual with a questionable agenda.

Unnatural or uncommon situations

- Be skeptical of videos that show uncommon or unlikely situations, such as a famous person doing something out of character or a political figure saying something controversial.

Check metadata

- Check the video’s metadata to see whether it matches the claims made about the video, such as the time and place of the recording.

- Just keep in mind that metadata can be easily altered, so it should not be the sole basis for determining the authenticity of a video.
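If you’re comfortable running a short script, you can read some of this metadata yourself. The sketch below is a minimal Python example under a few assumptions: the file is an MP4 or MOV video (both use the ISO base media file format), and the timestamp of interest is the creation time in the `mvhd` (movie header) box inside the `moov` box, which counts seconds from midnight UTC on January 1, 1904. The function names `iter_boxes` and `mp4_creation_time` are our own, not part of any library. As noted above, this timestamp is easy to edit, and some apps may leave it at zero or overwrite it when they re-encode a file, so treat the result as one clue among many.

```python
import struct
import sys
from datetime import datetime, timedelta, timezone
from typing import Iterator, Optional, Tuple

# MP4/MOV timestamps count seconds from midnight UTC, January 1, 1904.
MP4_EPOCH = datetime(1904, 1, 1, tzinfo=timezone.utc)


def iter_boxes(data: bytes, start: int = 0,
               end: Optional[int] = None) -> Iterator[Tuple[str, int, int]]:
    """Yield (box_type, payload_start, box_end) for each box in data[start:end]."""
    end = len(data) if end is None else end
    pos = start
    while pos + 8 <= end:
        # Each box starts with a 4-byte big-endian size and a 4-byte type.
        size, raw_type = struct.unpack(">I4s", data[pos:pos + 8])
        header = 8
        if size == 1:  # a 64-bit "largesize" follows the type field
            if pos + 16 > end:
                break
            (size,) = struct.unpack(">Q", data[pos + 8:pos + 16])
            header = 16
        elif size == 0:  # the box runs to the end of the enclosing range
            size = end - pos
        if size < header or pos + size > end:
            break  # malformed or truncated box: stop instead of guessing
        yield raw_type.decode("latin-1"), pos + header, pos + size
        pos += size


def mp4_creation_time(data: bytes) -> Optional[datetime]:
    """Return the creation time stored in moov/mvhd, or None if absent or unusable."""
    for box_type, payload, box_end in iter_boxes(data):
        if box_type != "moov":
            continue
        for child, body, child_end in iter_boxes(data, payload, box_end):
            if child != "mvhd" or child_end - body < 8:
                continue
            version = data[body]  # 1 byte of version, then 3 bytes of flags
            if version == 1 and child_end - body >= 12:
                (created,) = struct.unpack(">Q", data[body + 4:body + 12])
            elif version == 0:
                (created,) = struct.unpack(">I", data[body + 4:body + 8])
            else:
                return None
            if not created:
                return None  # some tools write 0 when they don't record a date
            try:
                return MP4_EPOCH + timedelta(seconds=created)
            except OverflowError:
                return None  # value too large to be a real date
    return None


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python mp4_created.py suspicious_clip.mp4
    with open(sys.argv[1], "rb") as f:
        print(mp4_creation_time(f.read()) or "No creation time recorded")
```

To try it, save the script (for example as `mp4_created.py`, a hypothetical name) and pass it the path to a video. The parser stops at the first malformed box rather than guessing, and it only reads the creation time in the movie header; it doesn’t look at other metadata such as location tags.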

Stay updated

- Be aware that the technology used to create deepfakes is constantly improving, and it may become more challenging to spot them in the future.

- Stay as up to date as you can on new developments in this area, and be cautious when sharing or reacting to videos that seem suspicious or extremely newsworthy.

What is the Mandela effect?

The term is named after Nelson Mandela, the South African anti-apartheid revolutionary and politician. Many people believed he died in prison in the 1980s despite abundant proof that he didn’t. In reality, he was released in 1990, served as South Africa’s president from 1994 to 1999, and passed away in 2013.

The Mandela Effect has since been used to describe various misremembered events or details, including the spelling of brand names, the lyrics of songs, the plot of movies or TV shows, and the details of historical events.

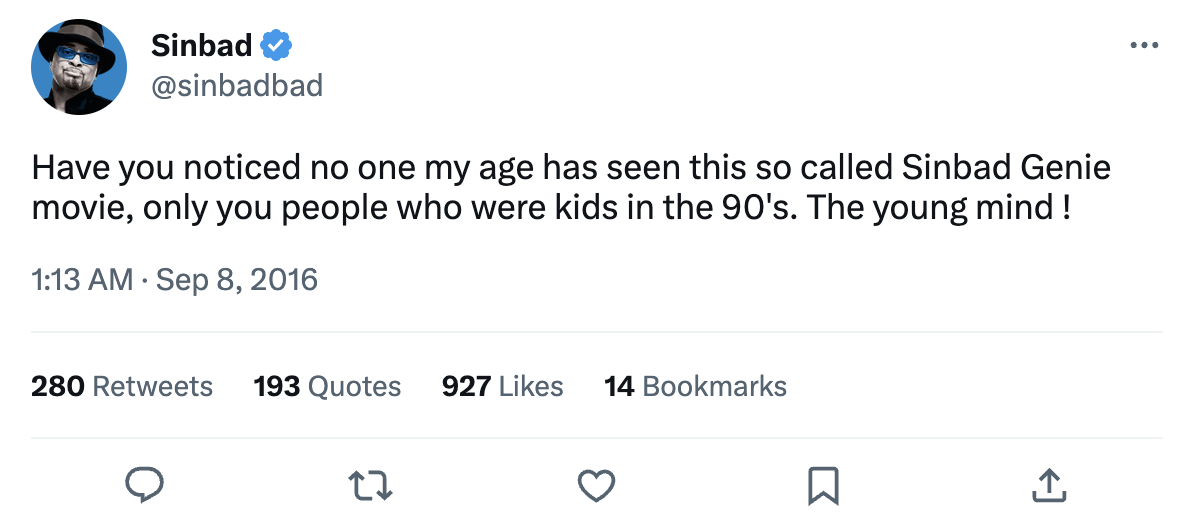

One example of the Mandela Effect in action involves the popular 1990s comedian Sinbad. Many people claim to remember a movie called Shazaam (not to be confused with the 2019 superhero movie, Shazam!), where Sinbad plays a genie who helps two kids. However, no such movie was ever made. The closest thing to it was a movie called Kazaam, which starred Shaquille O’Neal as a genie.

Despite the lack of evidence that Shazaam exists, many people remain convinced that they saw it and remember details about the plot, characters, and even what the movie poster looked like. Even though Sinbad himself has confirmed he’s never played a genie, the idea of the movie has become so ingrained in people’s memories that they are completely certain it’s out there.


What causes the Mandela Effect?

While the cause of the Mandela Effect isn’t fully understood, several theories attempt to explain it. One suggests that it results from false memories, where people remember events or facts incorrectly due to misinformation, misinterpretation, or the power of suggestion. A more far-fetched theory blames a glitch in the matrix or a parallel universe in which people experienced a different version of reality.

Psychologists think the Mandela Effect could stem from how our brains are wired. Our minds can be swayed by what other people say or by our own pre-existing beliefs, leading us to remember things incorrectly. These mental shortcuts are known as cognitive biases. For example, if many people on social media say that something happened a certain way, we may come to believe it really did, even if it didn’t.

Although the Mandela Effect isn’t new and mostly involves pop culture, the rise of deepfakes means that false information can spread faster and more easily, and that more people might start remembering things that never actually happened.

This raises important questions about whether we can trust the information we see online, whether it’s okay to use AI to manipulate images and videos, and how much power technology should have in shaping our memories and beliefs.

The danger of deepfake-derived memories

Deepfakes use AI to create realistic-looking videos and images of people saying and doing things that they never actually said or did. The technology is based on deep learning, a type of machine learning that involves training artificial neural networks on large amounts of data.

As of 2019, there were fewer than 15,000 deepfakes detected online. Today, that number is in the millions—with the number of expert-crafted deepfakes continuing to increase at an annual rate of 900%, according to the World Economic Forum.

One of the most concerning aspects of deepfakes is their potential to be used for malicious purposes, such as creating fake news or propaganda or impersonating someone for financial gain. The technology is also being used to create deepfake pornography, which has raised concerns about the exploitation of individuals and the potential for abuse.

Because deepfakes look so realistic, they can also make people believe they’ve seen something that never happened. Over time, people could come to remember that fabricated scenario as fact.

Here are a few examples of how deepfakes can contribute to the Mandela Effect and the dangers that come with them:

Creating fake news stories

Deepfakes could be used to create fake news stories so realistic that people believe them, even when they’re completely fabricated. For example, someone could fabricate reports of terrorist attacks or natural disasters with the sole purpose of advancing a particular socio-political agenda. If these stories are created with deepfakes, they can be made to look like real news reports, complete with convincing video and audio footage. This could ultimately give future generations a distorted view of history and events.

Swaying political opinion

A few months before the 2018 midterm elections, a deepfake video of Barack Obama calling then-president Donald Trump a bad name went viral. It was created by Oscar-winning director Jordan Peele to warn users about trusting the material they encounter online. Ironically, the video backfired after many of Trump’s supporters believed it to be real, with hordes of them taking to social media to express their outrage.

If a similar deepfake video of a political candidate or public figure saying or doing something that never actually happened was convincing enough, it could have detrimental effects on the functioning of democracy and the trust people have in institutions.

For example, as more people are exposed to a convincing deepfake of one of their country’s political candidates, whether on social media or through news outlets, false memories could spread and become accepted as true. This could sway how people vote and whom they choose as their leaders.

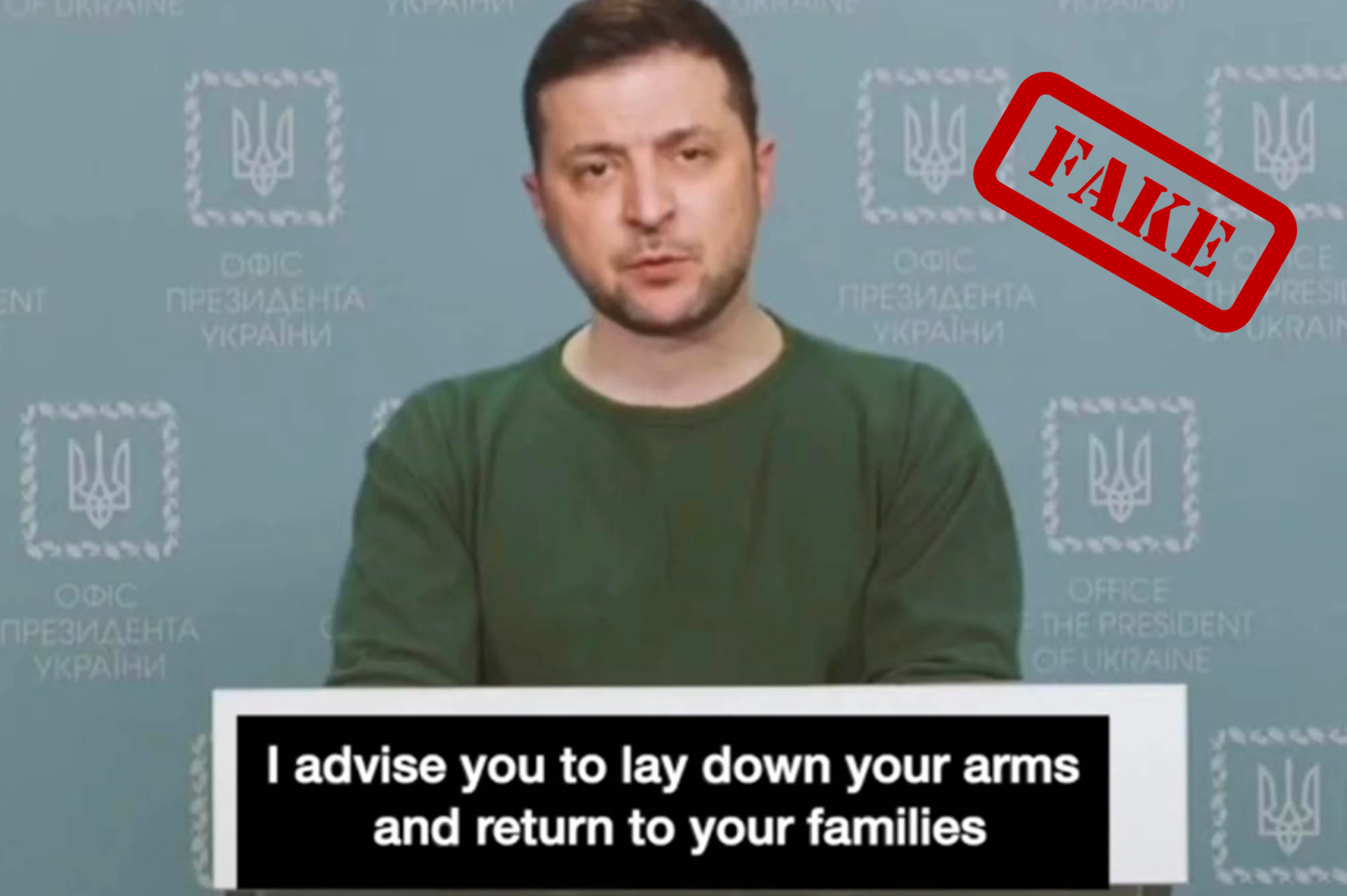

Driving propaganda campaigns

Deepfake propaganda campaigns, including state-sponsored ones, can be used to manipulate public opinion and spread disinformation, ultimately distorting people’s view of reality. For example, during the war with Russia, Ukrainian intelligence warned that a deepfake of Ukrainian President Volodymyr Zelenskyy was being circulated, in which he is seen calling on Ukrainians to surrender.

Despite many platforms taking the video down, it continues to pop up on several social media sites like Facebook, Reddit, and TikTok—sowing discord among the public and making it harder for them to discern the truth.

Altering historical footage

Deepfakes could be used to alter historical footage, such as political speeches or important events, in a way that changes people’s memories of what actually happened. This could sow confusion and uncertainty about the past, eroding public trust in historical records and media and ultimately feeding conspiracy theories.

Take the moon landing, for example. To this day, some people claim it was faked and never actually happened. Deepfakes could be used to fabricate compelling videos and audio recordings that bolster this false narrative, deepening skepticism and distrust about the authenticity of the event.

Manipulating social media content

Because social media platforms are so easy to access, deepfakes could be used to create fake posts that make it appear as though celebrities, public figures, or influencers attended an event or endorsed a product when in reality they didn’t. By allowing false narratives to spread unchecked, deepfakes can further polarize society and create fertile ground for disinformation campaigns. This, in turn, undermines the credibility of social media as a communication tool, which is critical for democracy and social cohesion.

Fabricating scientific evidence

From the origins of a virus to medical breakthroughs, deepfakes can be used to create fake scientific evidence supporting an untrue claim or hypothesis. For example, some people spread disinformation about climate change despite overwhelming scientific evidence that it’s caused by human activity. This kind of disinformation undermines efforts to address urgent issues like global warming.

Deepfakes could create false scientific evidence claiming that climate change is caused by natural factors rather than human activity. This could have serious implications: policymakers may be less likely to act on climate change if there’s widespread skepticism about its causes. It could also further divide public opinion and make it harder to reach a consensus on how to address this urgent issue.

Creating false alibis

Additionally, deepfakes could be used to create fake confessions or statements in court cases or to manipulate security footage to create alibis for criminals. This could lead to false narratives being created around the events of the crime, with people believing a) that the perpetrator didn’t commit the crime or b) that an innocent person did. Over time, this false narrative could become ingrained in the collective memory of those involved in the case, with them believing it to be fact.

Hypothetically, let’s say there’s a high-profile murder case where the defense team creates a highly realistic deepfake video showing the defendant in a different location at the time of the crime. The video is widely circulated, and many people come to believe that the defendant could not have committed the murder. Even if the video is later proven to be a deepfake and the defendant is found guilty, some people may continue to believe the defendant was innocent because they remember the video.
